Participatory assessment step by step

There are different ways of operationalising a participatory approach. Drawing from SDC’s and its partners’ experience, a participatory assessment can be operationalised through the seven steps described below.

Activity chart (main activities and outputs per step):

| Step | Main activities | Outputs |
| --- | --- | --- |
| Step 1: Choosing the PA as a methodology and defining a clear purpose and scope | Scoping workshop; recruitment of facilitator | General concept defined (purpose, scope, methodology, process frame); resources allocated |
| Step 2: Conceptual planning and kick-off | Defining roles; designing process; communication with stakeholders | Definition of target groups and peers; facilitation team established |
| Step 3: Training, piloting and validating the method and tools | Capacity-building workshop; pilot; refining methodology | Interlocutors prepared; methodologies finalised |
| Step 4: Data collection | Organising and conducting interviews; reflection sessions | Raw data set |
| Step 5: Data analysis and validation | Triangulation; validation workshop; analysis; synthesis | Consolidated data set; analysis |
| Step 6: Documentation, systematisation, sharing results | Drafting report; sharing and discussing findings | Synthesis report |
| Step 7: Learning and implementing | | Management response; summary of PA learnings |

There is no fixed blueprint, however. While the previous page provided some examples of different approaches, no templates are provided here, as they might be misleading and fail to take the specific purpose and context sufficiently into account. The process must be tailor-made, based on the considerations explained below.

Choosing the PA as a methodology and defining a clear purpose and scope

Key questions to answer: What is the assessment's purpose? What will we use the results for? Is the PA the appropriate methodology in light of the programme and its specifics? Do we have the necessary (human and financial) resources? How are we framing the process?

Responsibility: The programme funder and/or implementer

Tasks/outputs:

- Analyse context, logframe and programme conditions.

- Develop a general concept (scope, purpose, general approach and process steps, distribution of roles and tasks, risks to be aware of, timeline).

- Make human and financial resources available and budget the process.

- Recruit a facilitator who will be mainly responsible for the specific process design, managing the data collection and analysing the results.

- Organise and conduct a scoping workshop with key partners and, if needed, a resource person who is familiar with the PA methodology, to confirm and fine-tune the purpose and to build a common understanding of the conceptual approach and the process.

Additional recommendations and resources:

Possible purposes

What are possible purposes for a participatory assessment? Some examples:

- Getting a systemic understanding of the factors that shape the target groups' choices.

- Finding out whether the prodoc's assumptions and the theory of change reflect the realities that the target groups are living in. For example, verifying ToCs such as 'Better education leads to sustainable integration of the targeted individuals in the economic development of the region' or 'Increasing the competitiveness of farmers and small enterprises by facilitating changes in services, inputs and product markets will lead to increased income for poor men and women in rural areas.'

- Understanding the needs and aspirations of target groups with a view to tailoring the objectives of an intervention.

- Understanding the various levels of effects and results that the intervention might have had, or its contribution to change in the lives of target groups. This could focus on various areas linked to the logframe and beyond (e.g. safety and security, gender equality, access to basic services, etc.).

- Understanding the homogeneity or heterogeneity of target groups, with regard to a planned intervention.

- Assessing the appropriateness of the monitoring system (indicators, sources of information, methodologies) and project approaches.

Criteria that make a PA particularly appropriate (e.g. a long-term relationship with the target group)

A PA is particularly appropriate if and when:

- It is assumed that the voice of the beneficiaries for whom the programme is designed has not been heard clearly or loudly enough. By listening to the target groups' views, values and beliefs, the specific socio-cultural dimension of the intervention can be expected to improve.

- Other monitoring and evaluation approaches have focused on quantitative rather than qualitative indicators.

- There is a strong interest in learning about the target groups, about their diversity and how it is mirrored in the programme, and in deepening the understanding of the context through a broadening of perspectives.

- There is a genuine interest in challenging the programme's assumptions about how the systemic interactions between the programme and the context can be described. A programme manager summed up the concept of ToC brilliantly: “With all the knowledge I have about the context, the ToC is what I would have been betting on to be effective.”

- The programme is conducted in various regions representing different contexts, e.g. more or less exposed to violence or conflict. A comparison of the respective ToC and theories of action (ToA) allows for more specific adaptation of the programme.

- A long-term relationship with the target groups makes it worth investing in empowerment and ownership through participation.

- Ongoing cooperation between organisers, implementers and programme stakeholders makes it worth investing in building and strengthening relationships and in organisational learning.

- The programme is expected to become more responsive, more demand-led and more poverty-oriented, and to clarify the accountability of different stakeholders to the target groups.

Example of the objectives and expected results of a scoping workshop

The design and length of the workshop depend on the participants invited and their expectations, the number of participants and any preparatory steps preceding the workshop. In some cases, the 'national facilitator' has been selected before the scoping workshop to allow for a more in-depth reflection on how to adapt the methodology.

Objectives of the workshop:

Clarity among all stakeholders involved about the PA as an assessment approach and about the planning and decision-making it requires

Expected results of the workshop:

- The PA methodology is understood, and the adaptations needed for the specific programme are clear

- Methodology selected

- Scope, purpose and framework of the PA established

- Shared understanding of the framing and set-up of the PA established

- Different PA options developed

- Concrete planning steps for the PA established

- Selection criteria for the national facilitator established

Conceptual planning and kick-off

Key questions: How do we design the process in detail? Which peers will facilitate and accompany the data collection in the field, and how? Who will support the facilitator in their tasks? What profiles and capacities are required in the facilitator's team? Which target groups and individuals should we include? How do we recruit them and gather their viewpoints?

Responsibility: Organisers and facilitator

Tasks/outputs:

- Recruit the facilitator's team.

- Fine-tune the roles of the process actors, methodology for data collection, documentation, analysis and validation, in the light of the purpose: who, when, where, what, how.

- Plan feedback loops for managing risks, learning and steering throughout the process.

- Define criteria for identifying and selecting interlocutors and for recruiting peer assessors.

- Develop practical tools (communication with and messaging for stakeholders, target groups and peers, instructions for facilitators, interview guides, questionnaires, reporting formats, monitoring tools to monitor the assessment process).

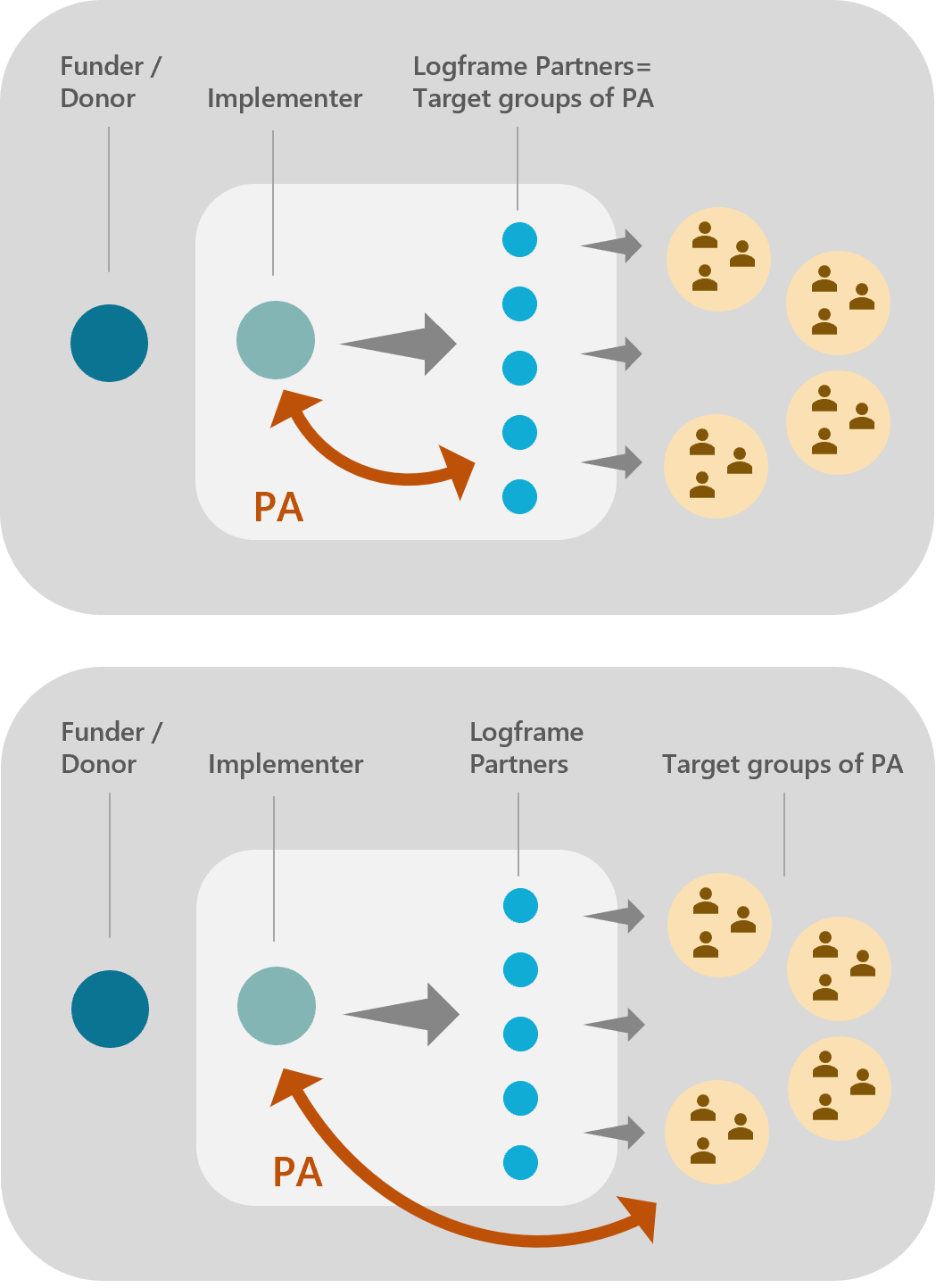

Things to consider when identifying interlocutors

- Interventions target different stakeholders and groups. The SDC’s programmes and projects often support partner institutions that are expected to have an impact on the lives of individuals (‘end beneficiaries’). Thus, the logframe expects outputs and outcomes mainly at the level of the targeted institutions – with some general expectations at the level of impact on citizens. If and when the funder directly involves ‘end beneficiaries’ as target groups in a participatory assessment, it may be going beyond the direct targets of the logframe system – and that comes with some challenges: who is learning what from the assessment? Are we empowering our intervention partners or a group of citizens (the target groups of our assessment) by giving them the power to assess the performance of our partners? Are we really assessing the impact that might be attributed to the intervention? How much is the reality that is assessed by the end beneficiaries linked to our intervention at all?

- Working with institutions as target groups comes with specific challenges in terms of participatory assessments: Who represents the institution? Who is considered a ‘peer’? Who would be acceptable and independent enough as a ‘peer’ interlocutor? What is the relationship between the two? How do you distinguish between the opinion of the individual representatives and the organisational viewpoint? How do the opinions depend on political power relations and interests? How sustainable is the qualitative data from the interviews (e.g. will it change after elections)? All these questions merit in-depth political analysis and a systemic understanding of the institutional set-up.

- Examples of terms of reference for the facilitator and co-facilitator (link to examples of ToRs)

Training, piloting and validating the method and tools

Key questions: What will be the concrete role of 'peers'? How do we build a common understanding with selected target groups and peers, and with a wider circle of stakeholders? How do we assess and build the competences of peer assessors? What are the topics and questions for the exchange between targeted individuals and peers? How do we document their insights? Does the approach work in practice?

Responsibility: Facilitator, together with organisers

Tasks/outputs:

- Frame, organise and programme the collection of data (inviting target groups and peers, deciding on methods and approaches to use, logistics, reporting).

- Prepare and share information with local stakeholders.

- Hold a capacity-building workshop with peer interviewers, and develop and adapt key questions to their needs and perspectives.

- Test and refine the approach in a pilot with selected target groups and peers, validating data collection approaches and questionnaires and re-formulating messages and questions if needed.

- Fine-tune and validate the approach after piloting, together with organisers.

Additional recommendations and resources

- Data collection may be organised in a great variety of forms and formats, from individual interviews to focus group discussions, in person, by phone or by virtual means. Selecting an adequate methodology requires careful reflection and must take into account the context, particularly its conflict and power dimensions.

- Because of their different roles, the way a facilitator interacts and communicates with local stakeholders will differ from the way the implementing partner is used to working. It is therefore important that the facilitator and the implementing organisation have clearly defined roles and are able to cooperate well in order to organise the field visits efficiently and effectively.

- The collaboration can only be successful if there is a mutual understanding that the assessment serves the organisational learning of the funder and the implementer. The intention of the PA is not to discover the 'errors' of the implementer but to bring new insights to the process. At the same time, there is a certain dependence (and power relation) between the implementer and the donor that should not be treated as taboo. This is challenging if the PA is preparing for a next phase that will be tendered out: while the implementer should be part of the learning process, the rules on public tendering mean they should not be given any special involvement.

- The field visits need thorough planning, anticipation of problems, a follow-up in the field, and flexibility, taking into account conflict risks and power relations between partner institutions and individual beneficiaries. All adaptations to a specific context should be documented, to enhance the transparency of the process and make it easier to interpret data.

- Different axes of empowerment for peers:

Graph: Miseli, Fabrice Escot, April 2021, Miseli - BuCo - Analyse PENF BA light - Pilote Sikasso 21 04

Data collection

Key questions: Are the target groups' views being collected and documented as planned? What do peers need in terms of support? What are the upcoming challenges and risks, and how will you deal with them?

Responsibility: Facilitator and his/her team, with the support of the organisers

Tasks/outputs:

- Inform identified target groups and other stakeholders about the expected process, methodology and results.

- Train peers in their pre-established role and methodology.

- Organise meeting places and logistics.

- Create regular opportunities for reflection on the process (with peers, among facilitators, with organisers).

- Facilitate and support data collection and reporting.

Additional recommendations and resources

Different views should be reflected as fairly as possible in all aspects of the PA process (design, data generation, analysis, and communication of findings and recommendations), bearing in mind possible risks for different groups. Possible participant bias, distortion or lack of ownership should be reflected on and documented, and the report or documentation should explicitly explain the extent to which it was possible to meet PA principles.

To better contextualise the collected data, a ‘mini-questionnaire’ asking the peers about certain aspects of their socio-economic context may prove helpful.

Understanding the peers' rationale for selecting and phrasing their questions to the interlocutors can make the subsequent interpretation clearer.

A regular, even daily, exchange among facilitators and peer interviewers (possibly including organisers) is needed to adapt the approach and the data collection programme to the evolving context, upcoming needs and risks. This has proven to help the facilitator develop a more thorough understanding of the process and its results.

Data analysis and validation

Key questions: How to analyse the raw information and synthesise the findings from collected data? Which biases might have influenced the data collection, and which biases could influence the data analysis? What are the key results to communicate? How to validate the findings from the perspective of target groups and peers and from the perspective of programme management and funders? What to conclude from the findings?

Responsibility: Facilitator and his/her team

Tasks/outputs:

- Collect the reports from data collection.

- Triangulate the data, analyse and synthesise the findings, draw conclusions in relation to the purpose of the assessment and take into account the possible influence of context factors on the data (such as violent conflicts in the community, or power relations between different target groups).

- Discuss and validate the findings with interlocutors, peers and/or experts, partners, management, funders.

- Refine the synthesis and conclusions.

Additional recommendations and resources

- When validating the data, it is worthwhile to design methodological formats that involve the peers in this reflection. (To be added: link to different examples/story (written, video) for reflection with peers)

- Example of objectives for a validation workshop (To be added: link to different examples/story (written, video) for reflection with peers)

Documentation, systematisation, sharing results

Key questions: What form of documentation and reporting of results is most useful in light of the purpose of the assessment? How did the planned process work out? Was the selection of interlocutors from target groups and peers appropriate? What were the challenges faced by the facilitators? What factors have been considered when interpreting the results? What is the information to share and communicate as a result, and with whom?

Responsibility: Facilitator

Tasks/outputs:

- Document the collected and validated data, analyse the process and (self-critically) identify the challenges that could influence the results.

- Produce a synthesis report in line with the purpose of the assessment, with interpreted results and conclusions in light of the challenges.

- Share and discuss results with organisers, project management and programme partners, in line with the agreed communication strategy.

Additional recommendations and resources

Important considerations for synthesis report

Synthesising results needs to be based on and framed by the purpose of the assessment and the assessment questions. The process will produce a series of stories told by target groups and peers, from a variety of perspectives and in different forms and terminology. The results must be summarised from the perspective of the facilitator, contextualised (related to the context of the target groups and that of the assessment process), and then analysed, with a view to providing conclusions and recommendations for the organiser. The authors will inevitably incorporate their own perspectives (including biases), so the analytical framework and biases should be made visible as far as possible.

Beyond the conclusions and recommendations for the management, the synthesis report should contain information about the assessment process and the impact of the specific process design (effects of empowerment, individual and institutional learning, change of relationships) – and reflections on the assessment process itself.

Example of synthesis report, with analysis of the assessment process:

(Different examples/story, written, video will be published here)

Learning and implementing

Key questions: What does the organiser's institution take from the assessment results? Do the results question our theories of change? Theories of action? Strategic orientation? Partners, approaches and methods? How will the intervention be adapted? Where is there inter-institutional learning – between funder, implementer and partners? Personal learning by staff? Who should learn and understand what? Who is motivated to learn? How can you focus the effort of learning and communicating beyond the operational adaptation of programmes? How can interlocutors (target groups and peers) be informed about the results, so that they can see that the management responds to their views and their contribution has been taken seriously?

Responsibility: Organisers (funders and programme implementers)

Tasks/outputs:

- Understand, integrate and interpret results in the light of the purpose and the process.

- Take and implement management decisions (management responses).

- Communicate decisions.

- Document learning, capitalise on and share experience from the process, in written or multimedia formats.

- Inform interlocutors (target groups, peers, stakeholders) about the results and the management responses taken.

Additional recommendations and resources

- The design of the learning process needs to reflect the key question: Who is learning what, and for what purpose? Different steps will be needed to gather learnings from individual stakeholders and to transfer them to a collective and an organisational level.

- Examples of learnings and capitalisation (To be added: link to examples)

- Examples of management decisions following a participatory assessment (To be added: link to examples)

To get started with your participatory assessment, contact us: Stephanie Guha, stephanie.guha(at)eda.admin.ch; Ursula König, uk(at)ximpulse.ch